Portfolio item number 1

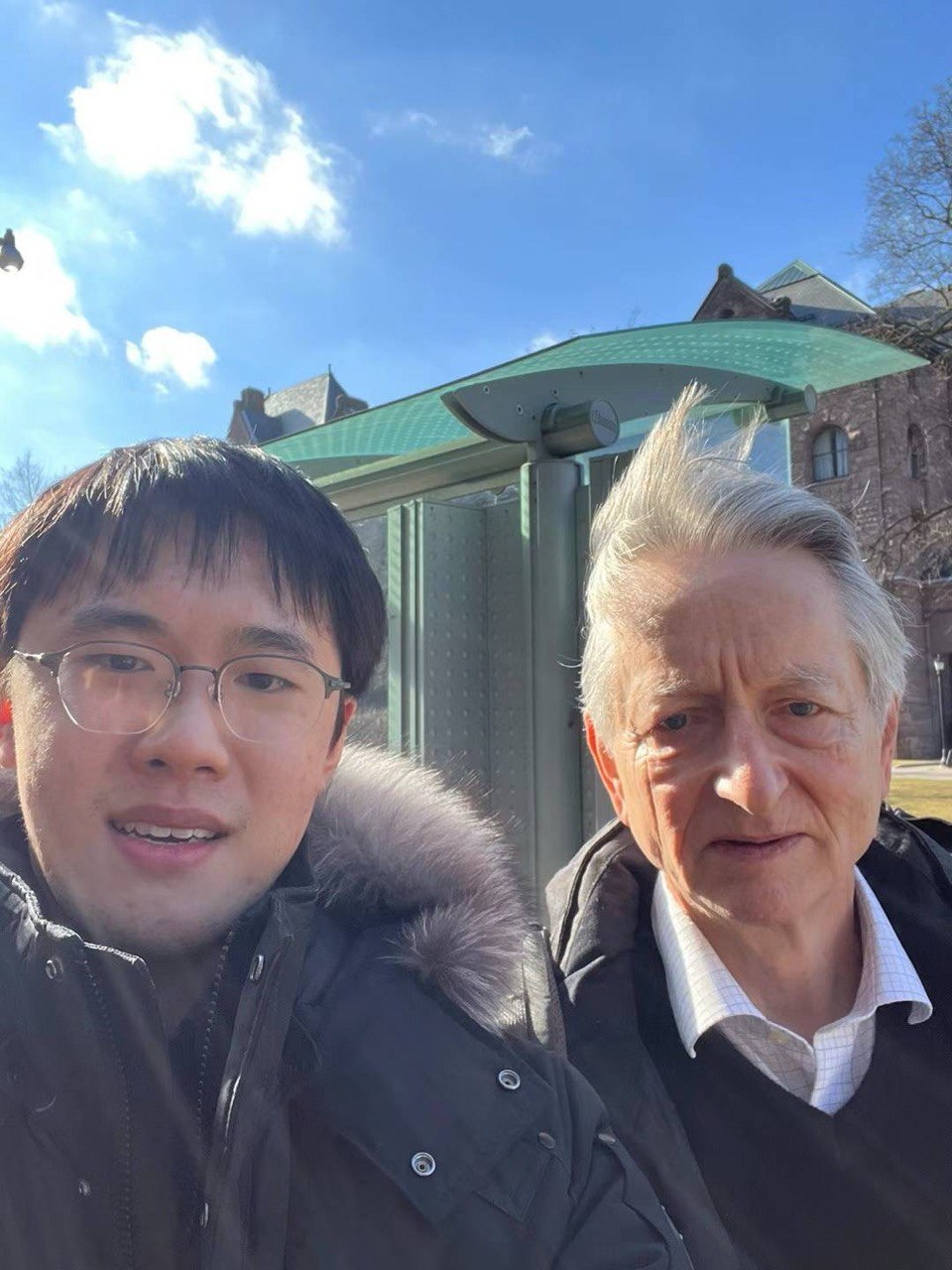

A selfie of me with Geoffrey Hinton, taken on February 9, 2024.

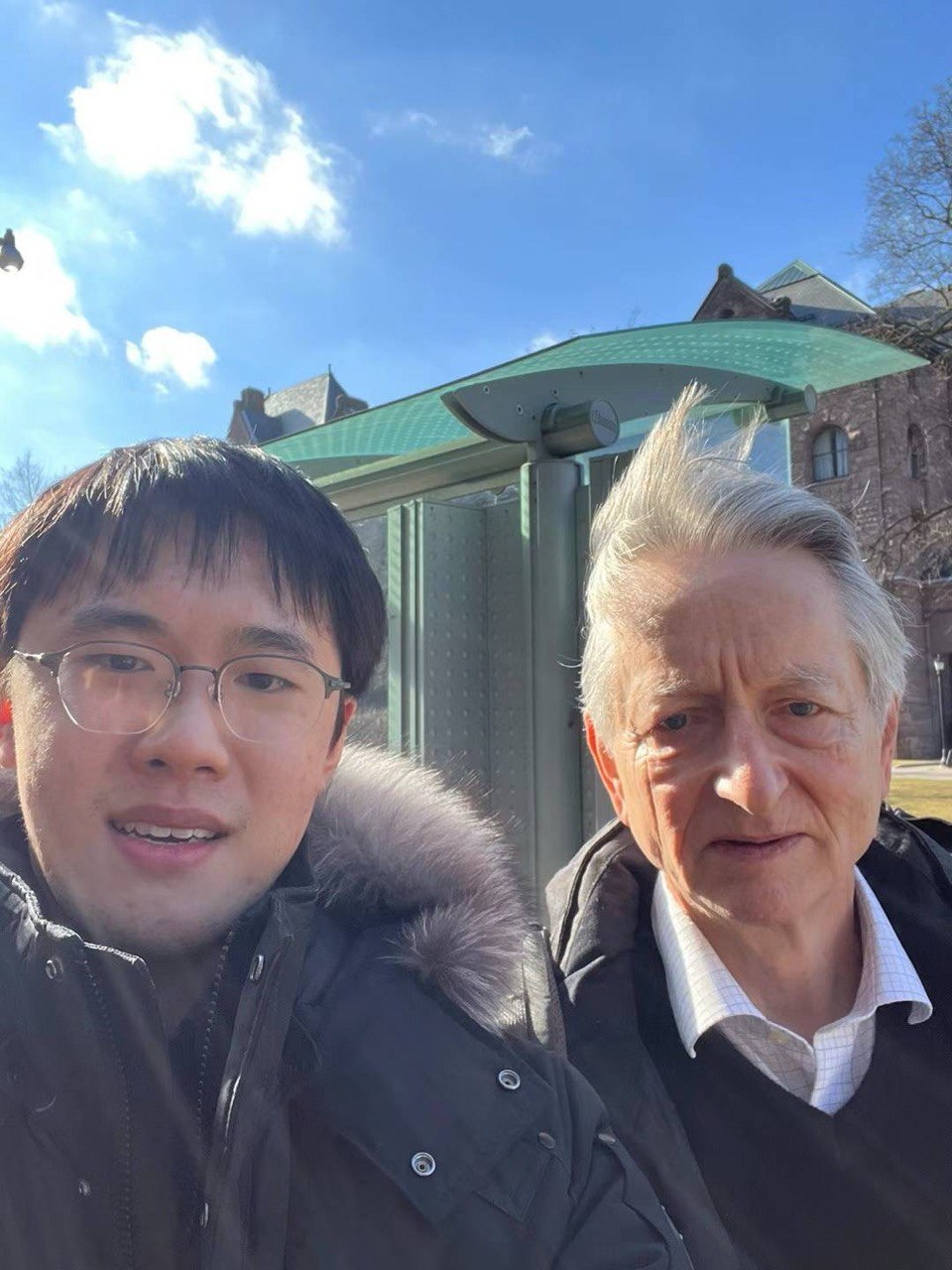

A selfie of me with Geoffrey Hinton, taken on February 9, 2024.

Short description of portfolio item number 2

Published in Journal 1, 2009

This paper is about the number 1. The number 2 is left for future work.

Recommended citation: Your Name, You. (2009). "Paper Title Number 1." Journal 1. 1(1). http://academicpages.github.io/files/paper1.pdf

Published in Journal 1, 2010

This paper is about the number 2. The number 3 is left for future work.

Recommended citation: Your Name, You. (2010). "Paper Title Number 2." Journal 1. 1(2). http://academicpages.github.io/files/paper2.pdf

Published in Journal 1, 2015

This paper is about the number 3. The number 4 is left for future work.

Recommended citation: Your Name, You. (2015). "Paper Title Number 3." Journal 1. 1(3). http://academicpages.github.io/files/paper3.pdf

Published in Journal of Xi'an University of Science and Technology, 2020

Influence of model parameters on transmission behavior of open hollow sphere metamaterials

Recommended citation: Xiaole Yan, Limei Hao, Linfeng Ye, Jiayu Ji, You Xie. (2020). "Influence of model parameters on transmission behavior of open hollow sphere metamaterials." Journal of Xi'an University of Science and Technology. https://kns.cnki.net/kcms/detail/detail.aspx?dbcode=CJFD&dbname=CJFDLAST2020&filename=XKXB202003020&uniplatform=NZKPT&v=vqTPDs4aIcs_i7BK5AHswOVrhyMF4NM46yVVJArNE3IPc0c5z_WpNlywMLP_I82j

Published in Canadian Workshop on Information Theory (CWIT), 2022

Despite the great success of deep neural networks (DNNs) in computer vision, they are vulnerable to adversarial attacks. Given a well-trained DNN and an image x, a malicious and imperceptible perturbation ε can be easily crafted and added to x to generate an adversarial example x′. The output of the DNN in response to x′ will be different from that of the DNN in response to x. To shed light on how to defend DNNs against such adversarial attacks, in this paper, we apply statistical methods to model and analyze adversarial perturbations ε crafted by FGSM, PGD, and CW attacks. It is shown statistically that (1) the adversarial perturbations ε crafted by FGSM, PGD, and CW attacks can all be modelled in the Discrete Cosine Transform (DCT) domain by the Transparent Composite Model (TCM) based on generalized Gaussian (GGTCM); (2) the CW attack puts more perturbation energy in the background of an image than in the object of the image, while there is no such distinction for FGSM and PGD attacks; and (3) the energy of adversarial perturbation in the case of the CW attack is more concentrated on DC components than in the case of FGSM and PGD attacks.
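Claim (1) above models perturbation DCT coefficients with a generalized-Gaussian-based TCM. As a rough illustration only, the sketch below fits a plain zero-mean generalized Gaussian (not the full TCM, which additionally separates out a tail component) by moment matching: the ratio (E|X|)² / E[X²] = Γ(2/β)² / (Γ(1/β)Γ(3/β)) increases monotonically with the shape β, so β can be found by bisection. It assumes NumPy; `fit_ggd` and `ggd_moment_ratio` are hypothetical helper names, not code from the paper.

```python
import math

import numpy as np


def ggd_moment_ratio(beta: float) -> float:
    """(E|X|)^2 / E[X^2] for a zero-mean generalized Gaussian with shape beta."""
    # Computed through log-gamma to avoid overflow for small beta.
    return math.exp(
        2 * math.lgamma(2 / beta) - math.lgamma(1 / beta) - math.lgamma(3 / beta)
    )


def fit_ggd(samples, lo: float = 0.1, hi: float = 10.0, iters: int = 100):
    """Moment-matching estimate (beta, alpha) of a zero-mean generalized Gaussian.

    Assumed density: p(x) = beta / (2 * alpha * Gamma(1/beta)) * exp(-(|x| / alpha) ** beta).
    """
    x = np.asarray(samples, dtype=float).ravel()
    m1 = float(np.mean(np.abs(x)))
    m2 = float(np.mean(x ** 2))
    target = m1 ** 2 / m2
    # The ratio increases monotonically with beta, so bisection finds the match
    # (targets outside the bracket converge to lo or hi).
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if ggd_moment_ratio(mid) < target:
            lo = mid
        else:
            hi = mid
    beta = 0.5 * (lo + hi)
    # Scale from E[X^2] = alpha^2 * Gamma(3/beta) / Gamma(1/beta).
    alpha = math.sqrt(m2 * math.exp(math.lgamma(1 / beta) - math.lgamma(3 / beta)))
    return beta, alpha


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    laplace = rng.laplace(scale=1.0, size=200_000)  # true shape beta = 1
    gauss = rng.normal(scale=1.0, size=200_000)     # true shape beta = 2
    print(fit_ggd(laplace))
    print(fit_ggd(gauss))
```

Laplacian samples should give β close to 1 and Gaussian samples β close to 2. Applying this to the abstract's setting would mean passing the DCT coefficients of ε at one frequency position as `samples`, which is an assumption about how the coefficients are grouped.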

Recommended citation: L. Ye, E.-h. Yang and A. H. Salamah, "Modeling and Energy Analysis of Adversarial Perturbations in Deep Image Classification Security," 2022 17th Canadian Workshop on Information Theory (CWIT), 2022, pp. 62-67, doi: 10.1109/CWIT55308.2022.9817678. https://ieeexplore.ieee.org/abstract/document/9817678
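Claim (3) in the CWIT abstract compares how much perturbation energy falls on DC components. The following is a minimal NumPy sketch of one way to measure that share for a single-channel perturbation, assuming 8×8 block DCTs (as in JPEG) with the DC term taken as the (0, 0) coefficient of each block; the paper's exact transform and block size may differ. `dct_matrix`, `blockwise_dct`, and `dc_energy_fraction` are hypothetical names, and the random sign pattern is only a stand-in for a real FGSM perturbation.

```python
import numpy as np


def dct_matrix(n: int) -> np.ndarray:
    """Orthonormal DCT-II matrix C, so that C @ v is the 1-D DCT of v."""
    k = np.arange(n)[:, None]  # frequency index (rows)
    i = np.arange(n)[None, :]  # sample index (columns)
    c = np.sqrt(2.0 / n) * np.cos(np.pi * (2 * i + 1) * k / (2 * n))
    c[0, :] = np.sqrt(1.0 / n)  # DC row uses the smaller normalization
    return c


def blockwise_dct(x: np.ndarray, block: int = 8) -> np.ndarray:
    """2-D DCT of each non-overlapping block x block tile of a 2-D array.

    Returns an array of shape (rows // block, cols // block, block, block).
    """
    h, w = x.shape
    if h % block or w % block:
        raise ValueError("image sides must be multiples of the block size")
    c = dct_matrix(block)
    tiles = x.reshape(h // block, block, w // block, block).transpose(0, 2, 1, 3)
    # C @ X @ C^T on every tile; matmul broadcasts over the two leading axes.
    return c @ tiles @ c.T


def dc_energy_fraction(perturbation: np.ndarray, block: int = 8) -> float:
    """Share of the perturbation's energy carried by the blockwise DC coefficients."""
    coeffs = blockwise_dct(np.asarray(perturbation, dtype=float), block)
    total = np.sum(coeffs ** 2)  # equals the pixel-domain energy: the DCT is orthonormal
    dc = np.sum(coeffs[..., 0, 0] ** 2)
    return float(dc / total)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    eps = 8 / 255
    # Stand-in for an FGSM-style perturbation: +/- eps with random signs
    # (a real attack would take the sign of the loss gradient instead).
    sign_like = eps * rng.choice([-1.0, 1.0], size=(32, 32))
    # A constant offset puts all of its energy on DC terms.
    constant = np.full((32, 32), eps)
    print(dc_energy_fraction(sign_like))
    print(dc_energy_fraction(constant))
```

For the random sign pattern the DC share is about 1/64 in expectation (one of 64 coefficients per block), while the constant offset gives a share of essentially 1. A higher share for a real perturbation means its energy is more concentrated on DC components, which is the kind of comparison claim (3) makes between attacks.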

Published:

This is a description of your talk, which is a markdown file that can be markdown-ified like any other post. Yay markdown!

Published:

This is a description of your conference proceedings talk; note the different field in type. You can put anything in this field.

Workshop, University 1, Department, 2015

This is a description of a teaching experience. You can use markdown like any other post.

Academic Support Team, XUST, ECE, 2017

This is a description of a teaching experience. You can use markdown like any other post.